Built for Scale.

Consistent, predictable and scalable.

Meet the A-list of Cloud Performance, and we can prove it.

We have more server capacity than anyone else, and our scaling process mandates a minimum 40% redundancy buffer at all times, all built on industry-leading Amazon Web Services (AWS).

This keeps our services reliable and fast, even at peak usage times, which is why Australia's leading companies choose DataTools for their address capture and verification solutions.

Consistently high performance.

Enhanced Quality Management Certification

By implementing ISO 9001, companies can demonstrate their commitment to quality, efficiency and customer satisfaction.

Engineered for 24/7 Reliability.

Set and forget

DataTools Server Specialists look after all server updates, including security patches and data file updates, so neither you nor your IT team needs to worry about them.

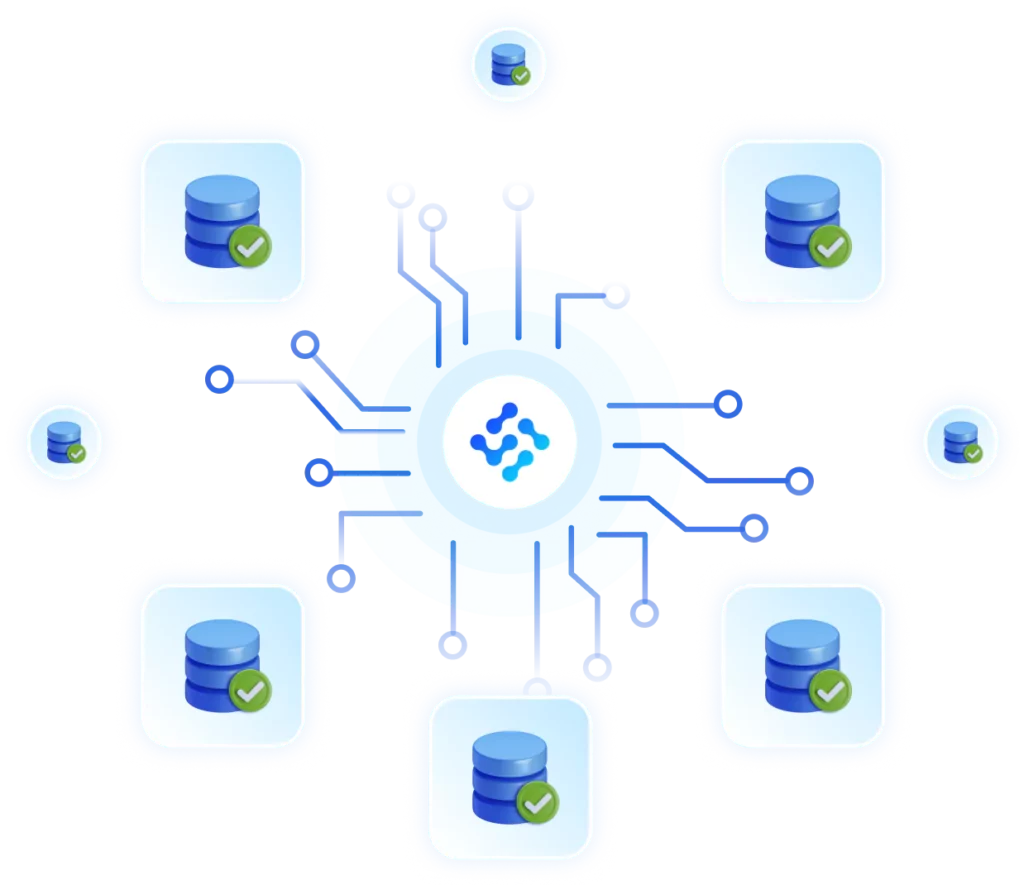

An architecture prepared for growth.

Capacity

DataTools Shared Server Clusters contain a minimum of 20 large servers in the Amazon Web Services Sydney Region. For increased reliability, the servers are split evenly across multiple isolated Availability Zones connected by low-latency links.

Scalability and Auto Scaling

DataTools continuously monitors server traffic and performance to ensure the base cluster can handle expected demand with capacity in reserve.