Let's Get Down and Dirty. Data Washing 101.

And this is all good stuff, but what happens when an update or a newly imported list doesn’t conform with an organisation’s standards?

In this case, the business needs to “wash” the list first before importing it. Let’s discuss the kind of ongoing maintenance needed for an organisation’s database.

Table of Contents

Getting down and dirty with washing!

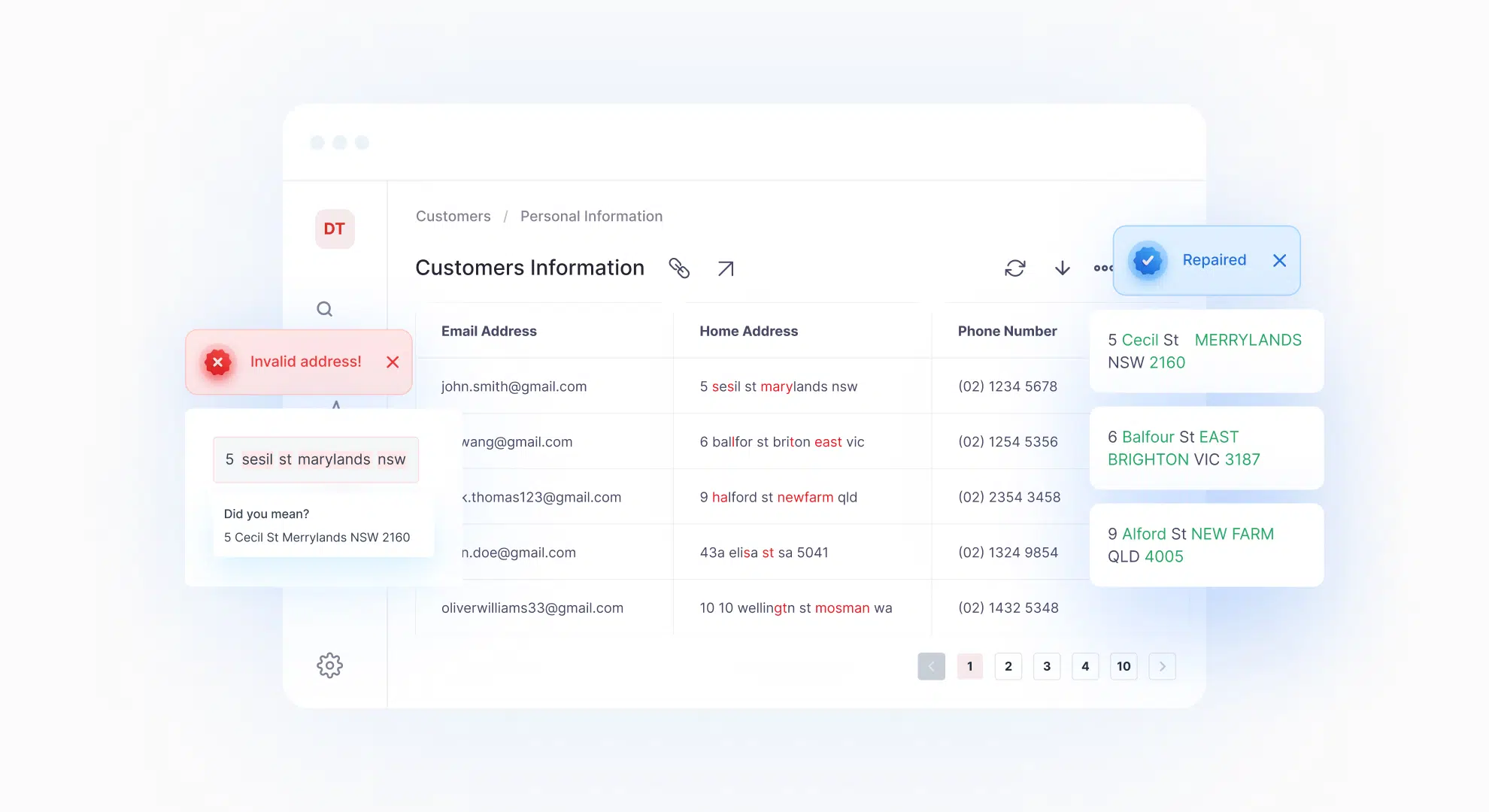

Data Washing: Taking an update or newly imported data list that does not conform with your database and normalising, conforming, and validating it so that you don’t have to worry about corrupt or inconsistent data down the line.

Washing lists is not a hard process, and is as easy as normalisation, conformation and validation.

- Normalise your incoming data to ensure compliance with the way you store data.

- Ensure that the data conforms to industry standards, such as AMAS.

- Validate that the above steps have been correctly performed before proceeding.

Once a business has these three steps covered, then they’ve already covered half of the fight to ensuring that their database does not contain corrupted or inconsistent data.

The next step they would want to take is to make sure their imports do not contain duplicates and that the information is distinct to what is already in the database.

One way that businesses can run this whole data washing process with ease is through validation products. When in need, find one that works with whatever database the business runs on (MS SQL, Excel, or Access) and discern whether an API or desktop version would be best for the job.

For like records (i.e. Smith and Smth), look for a product that can search and eliminate as needed. Most businesses will deal with records at either a Household or Phone Number level, but it’s often valuable to have multiple persons at a single household in case the business needs to target persons by Gender or select households where some form of union is in place. The point is that ideally, the chosen validation product should be capable of eliminating duplicates using different business rules, so that it can be tailored specifically to one’s needs.

Database example using DataTools Kleber to validate, cleanse and repair a CRM.

If you must take it out, do it when you can most afford to.

One challenge that data managers often face is the need to transform/cleanse data during “Off Peak” or “Out of Hours” times. The best way to tackle this would be to set up a process that can perform the task multiple times. This essentially makes the first time a practice run, while the second or third times are for keeps.

This allows data managers to spend most of their time setting up their processes, and then running it for the last time during the “Off Peak” times. This method ensures that an appropriate amount of quality control can be performed and mitigates any risk of error, because data can still be changed while working outside of the database. This iterative approach also facilitates learning from each run, enhancing the efficiency and accuracy of data processing with each iteration.